Nari Dia 1.6B TTS Model Review

I recently got the chance to try out the Nari Dia 1.6B Text-to-Speech (TTS) model from Nari Labs, which many people have been promoting online. It’s available on Hugging Face Spaces, and they say it offers full control over scripts and voices.

Naturally, I was curious. I wanted to see how good it actually is — not just based on marketing but based on real-world usage. So, I decided to run a few tests, record my experience step-by-step, and share everything with you in this article.

Getting Started with Nari Dia TTS

To use the model, you’ll need to visit its Hugging Face space. One important thing to note: You must be logged in. Without being logged in, the model usually doesn’t allow you to generate output. That’s your first checkpoint.

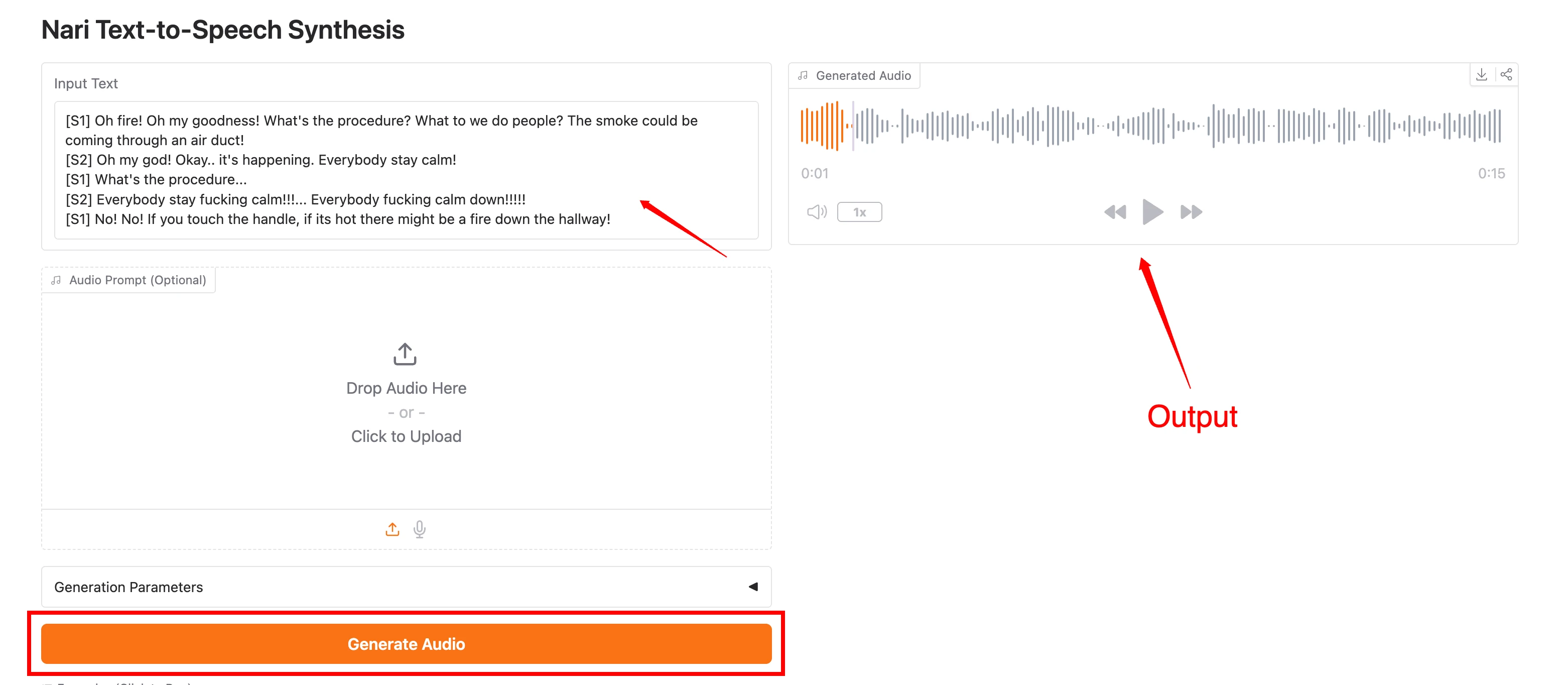

- You’ll see a default input text field.

- This comes preloaded with a script that's considered the "best sample."

- Let’s try running that and see how it performs.

Running the Default Text Sample

- I left the input as it was.

- Clicked the Generate button.

- Waited around 17 seconds — although the screen claimed 24 seconds.

Output:

“Dia is an open weights text-to-dialogue model. You get full control over scripts and voices. Try it now on GitHub or Hugging Face.”

Honestly, it sounded really good. If every output was like this, I’d be thrilled.

But that’s where the good part ends.

Real Test: Using Custom Text

- I copied a random piece of text.

- Pasted it into the input box.

- Ran the model again.

Time Taken: Again, around 17 seconds.

Voice Output: The result was okay, but I immediately noticed a few problems:

- Inconsistent voice: The voice changed when I ran the same text again.

- Speaker switching: Sometimes the voice would shift mid-sentence.

This inconsistency can be very frustrating if you’re trying to maintain a steady tone or character across your audio project.

Voice Consistency Issues

The developers themselves admit this:

The model was not fine-tuned on a specific voice.

What this means is:

- You’ll likely get different voices even when using the same text.

- For speaker consistency, you’re advised to use:

- An audio prompt (guide not yet available).

- Or try fixing the random seed.

I did try running the same prompt twice, hoping it would keep the voice the same — but it didn’t.

Testing Again for Consistency

- Pasted the text again.

- Clicked Generate.

Again, the result came back in about 17 seconds.

But once more, the voice was different. Not drastically, but enough to break continuity.

This means, even if the model sounds decent, it doesn’t really support repeatability, which is crucial if you’re building long-form content or character-based narration.

Performance at the End of the Clip

- Towards the end of the audio, the speech sometimes speeds up unnaturally.

- It felt rushed, like the model was trying to finish early.

This change in speed breaks immersion and makes the audio feel low-quality, especially during longer paragraphs or scripts.

Trying Voice Cloning: Does It Work?

I also wanted to test out the model's cloning ability. I had a short voice clip:

“Do you ever wonder how to win Dota games? Well, I got one word for you….”

I uploaded this and hoped the model would replicate that voice in its own generated speech.

Result:

- The voice it generated was nowhere near the original.

- It made some attempt — I could hear small similarities — but it was mostly a mismatch.

This means the model currently does not support accurate voice cloning.

Key Issues Identified

| Issue | Description |

|---|---|

| Voice inconsistency | The same text yields different voices each time. |

| No speaker control | It switches speakers mid-sentence. |

| Voice cloning fails | Uploaded voices are not replicated accurately. |

| Speed issue | The voice gets fast at the end of the audio. |

| No customization | Audio prompts or voice guides are not available yet. |

What Can You Actually Use It For?

While I wouldn't recommend this model for professional voice-over work or serious storytelling, it might still be useful for:

- Quick demos

- Idea prototyping

- Casual experimentation

But you’ll have to live with the inconsistencies.

How to Test It Yourself: Step-by-Step Guide

- Go to Hugging Face

- Visit Hugging Face Spaces

- Search for Nari Dia 1.6B

- Make sure you're logged in

- Try the Default Sample

- Let the default prompt remain

- Click Generate

- Wait around 17–24 seconds

- Listen to the result

- Use Custom Text

- Paste your own script

- Click Generate

- Listen and note the differences

- Test for Consistency

- Run the same text again

- See if the voice changes

- Repeat a few times

- Test Cloning (Optional)

- Upload a short audio clip of your own voice

- See how close the generated voice matches

My Final Verdict

The Nari Dia 1.6B model is promoted heavily, and yes — the default sample sounds great. But once you start experimenting with your own scripts, the flaws start to appear.

- The voice sounds natural in some samples but is inconsistent overall.

- Cloning does not work well.

- There’s no reliable way to keep the same speaker voice.

- The ending part of the audio becomes unusually fast, affecting overall quality.