What is Dia AI by Nari Labs?

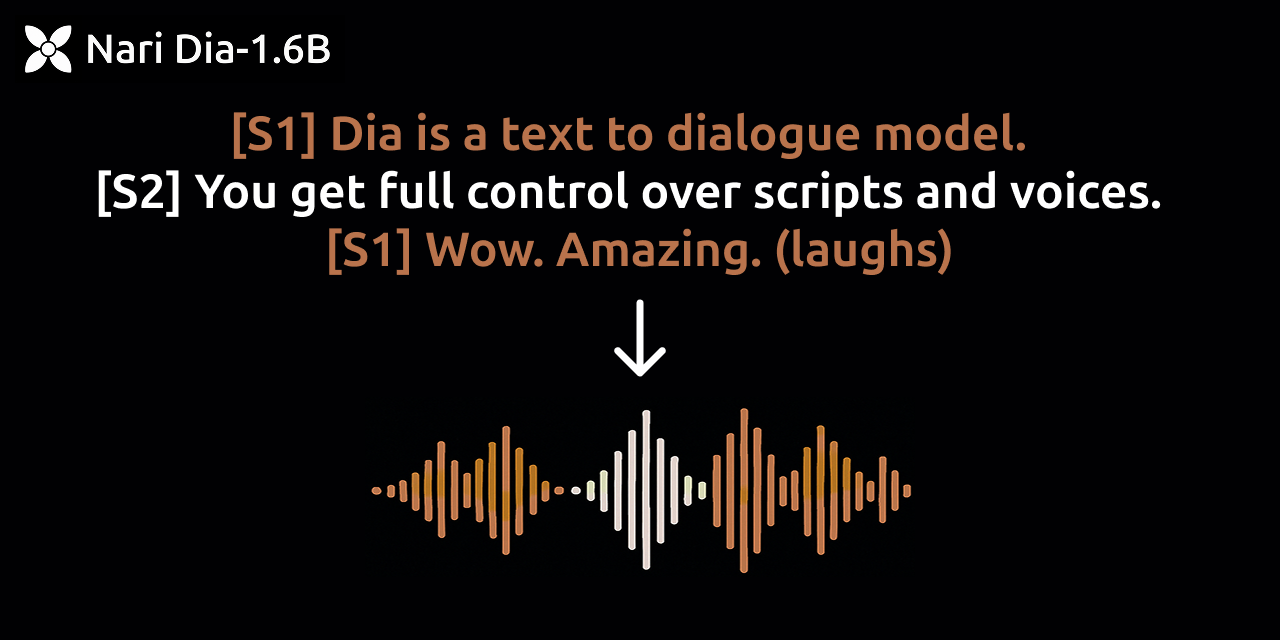

DIA-1.6B is a text-to-speech model designed to create highly realistic speech from a provided transcript. You can assign different speakers to different lines, making it feel like a real conversation.

Even more interesting, it’s capable of producing non-verbal sounds like:

- Laughter

- Coughing

- Clearing throat

Overview of Dia AI

| Feature | Details |

|---|---|

| Model Name | DIA-1.6B |

| Developer | Nari Labs |

| Size | ~6.5 GB |

| License | Apache 2.0 (open-source) |

| Requirements | ~10GB Video RAM (VRAM) |

| Non-verbal Generation | Laughter, Coughing, etc. |

| Interface | Gradio-based UI |

| Hosting | Hugging Face |

Requirements to Run DIA-1.6B

Before getting started, here’s what you’ll need:

- Around 10GB of Video RAM (VRAM)

- Basic Python environment

- Familiarity with Gradio interface

- Optional: Apple Silicon support (user reports)

Key Features of DIA-1.6B

Realistic Dialogue

Assign different speakers to different lines.

Non-verbal Sounds

Includes laughter, coughing, and throat clearing.

Simple Installation

Very beginner-friendly compared to many TTS models.

Open Source License

Apache 2.0 ensures flexibility and openness.

Cross-Platform Support

Some users even reported successful use on Apple Silicon devices.

Pros and Cons

Pros

- Fast video editing

- AI-powered workflow

- User-friendly interface

- Real-time preview

- No-code platform

Cons

- Limited customization options

- Requires internet connection

- Few export formats

How to Use DIA-1.6B

Setting up DIA-1.6B turned out to be surprisingly easy. Here's how you can get started.

Step 1: Install Necessary Packages

Ensure you have Python installed. Then run:

git clone https://github.com/nari-labs/DIA-TTS.git cd DIA-TTS pip install -r requirements.txtStep 2: Launch the Gradio UI

Run the script to start the Gradio interface:

python app.pyThe script will automatically download model weights (~6.5 GB) and set up the Gradio server. You’ll see a link in the terminal once ready.

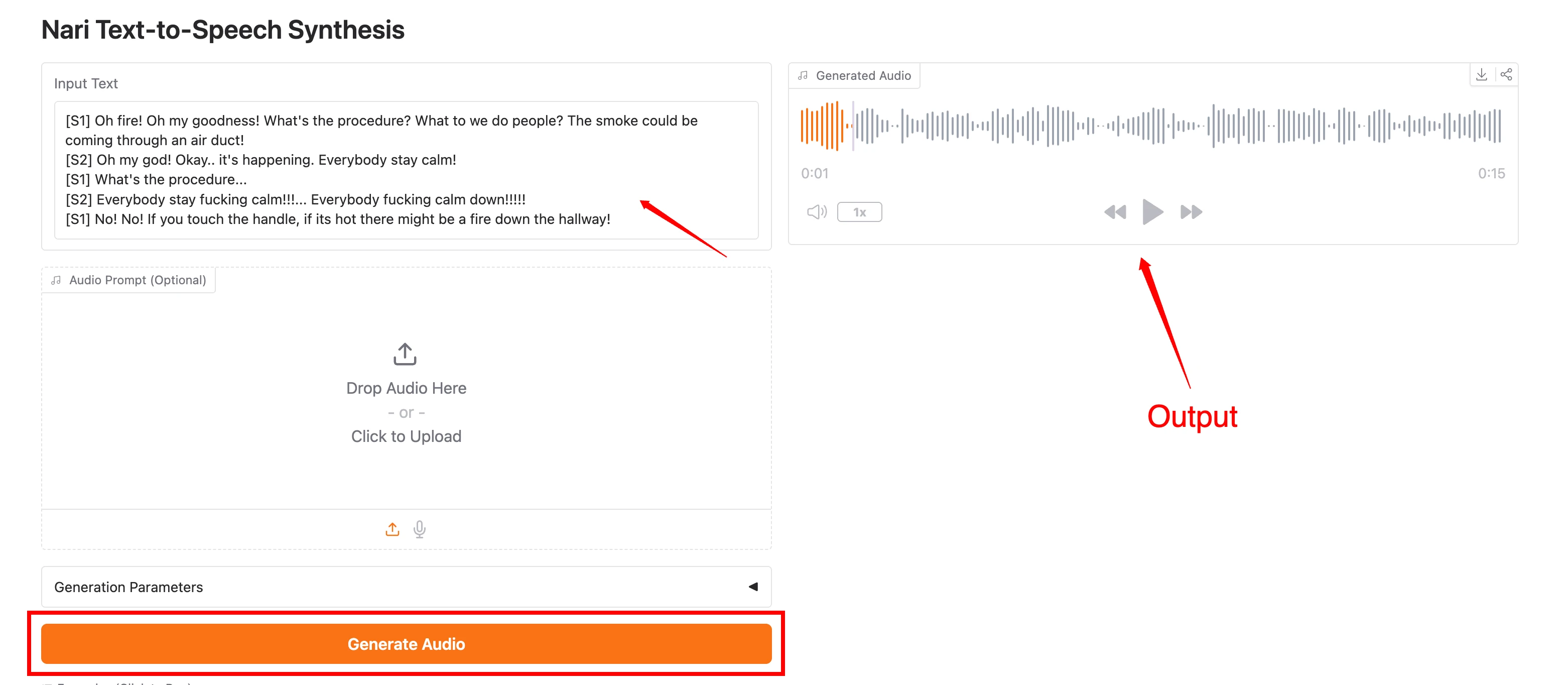

Step 3: Generate Dialogue

In the Gradio UI:

- Input your script.

- Assign speakers as S1, S2, etc.

- Click Generate Audio.

Local Installation Details

For local installation, you don't need to manually download the model files. Running the provided script will automatically fetch everything from Hugging Face.

Important notes during installation:

- Expect about 7.4 GB VRAM usage once the model is running.

- Full VRAM usage peaks around 10 GB during active generation.

- The model file downloads are automatic and straightforward.

I noticed the model even provides a sharable Gradio link if needed, though personally, I prefer using it locally for better control.

First Testing Experience

After launching the Gradio UI and loading the model, I was ready for my first test.

Here's what happened:

- Entered two lines assigned to Speaker 1 and Speaker 2.

- Clicked Generate Audio.

- VRAM spiked to around 10 GB.

- Within seconds, the model generated a fluid conversation.

Listen to the demo audio:

Setting Up the DIA TTS Model in Google Colab

Here’s the part most of you are here for: running the model yourself.

Let me walk you through the step-by-step setup using Colab.

Step 1: Connect to a T4 GPU in Google Colab

- Go to Runtime > Change runtime type.

- Select GPU and ensure the GPU type is T4.

- Click Save.

Step 2: Clone the DIA GitHub Repository

In your first code cell, run:

!git clone https://github.com/nari-labs/DIA-TTS.gitReplace <dia-repo-url> with the actual link from the DIA GitHub repository.

This pulls down the latest version of the model and related files.

Step 3: Install Required Dependencies

The model depends on the soundfile package, inspired by the SoundStorm paper. Run:

!pip install soundfileStep 4: Load the Model

Now we’re going to load the DIA 1.6B model. This part takes a few minutes since you’re loading 1.6B parameters into memory.

# Load the model weights (example placeholder)

from dia_tts import load_model

model = load_model("path_to_model_weights")Once loaded, the model is ready to take text input and generate audio output.

Testing the Model Output

Let’s see what it can do. Once the model is ready, try running the following:

text = "Hello, this is DIA — an open weights text-to-dialogue model."

audio = model.generate(text)You can save or play the audio like this:

import soundfile as sf

sf.write('output.wav', audio, 16000)Or use Colab to play it directly:

from IPython.display import Audio

Audio('output.wav')